Overview

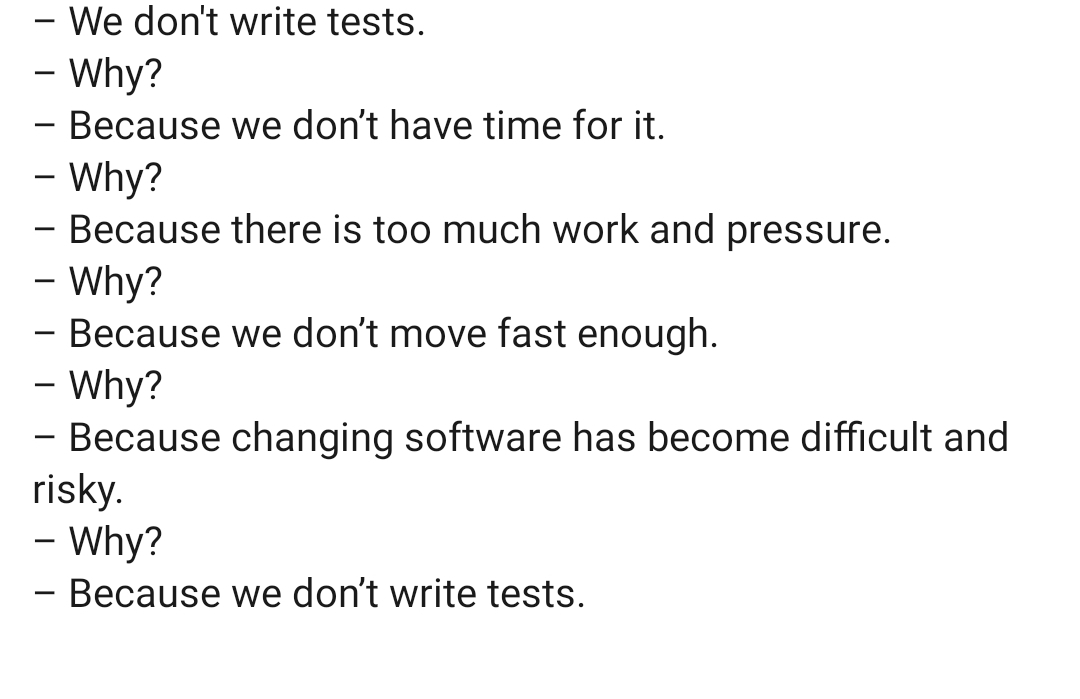

Let's be honest: testing in enterprise web applications is often minimal and ad-hoc.

The pervasive lack of automated tests comes from a perception that the effort of setting them up is not worth the return. The people who would implement the tests therefore struggle to make a compelling case for the client to invest in them, so the tests never get written. Let's challenge that perception by demonstrating that it is not true.

The Stack

This post was written with the following stack in mind, so some details may vary in your case:

- Sitecore XM running on Azure PaaS

- GitHub Actions (task runner agent)

- Next.js (head application)

- Vercel (hosting)

- Playwright (for testing)

Proposed Approach

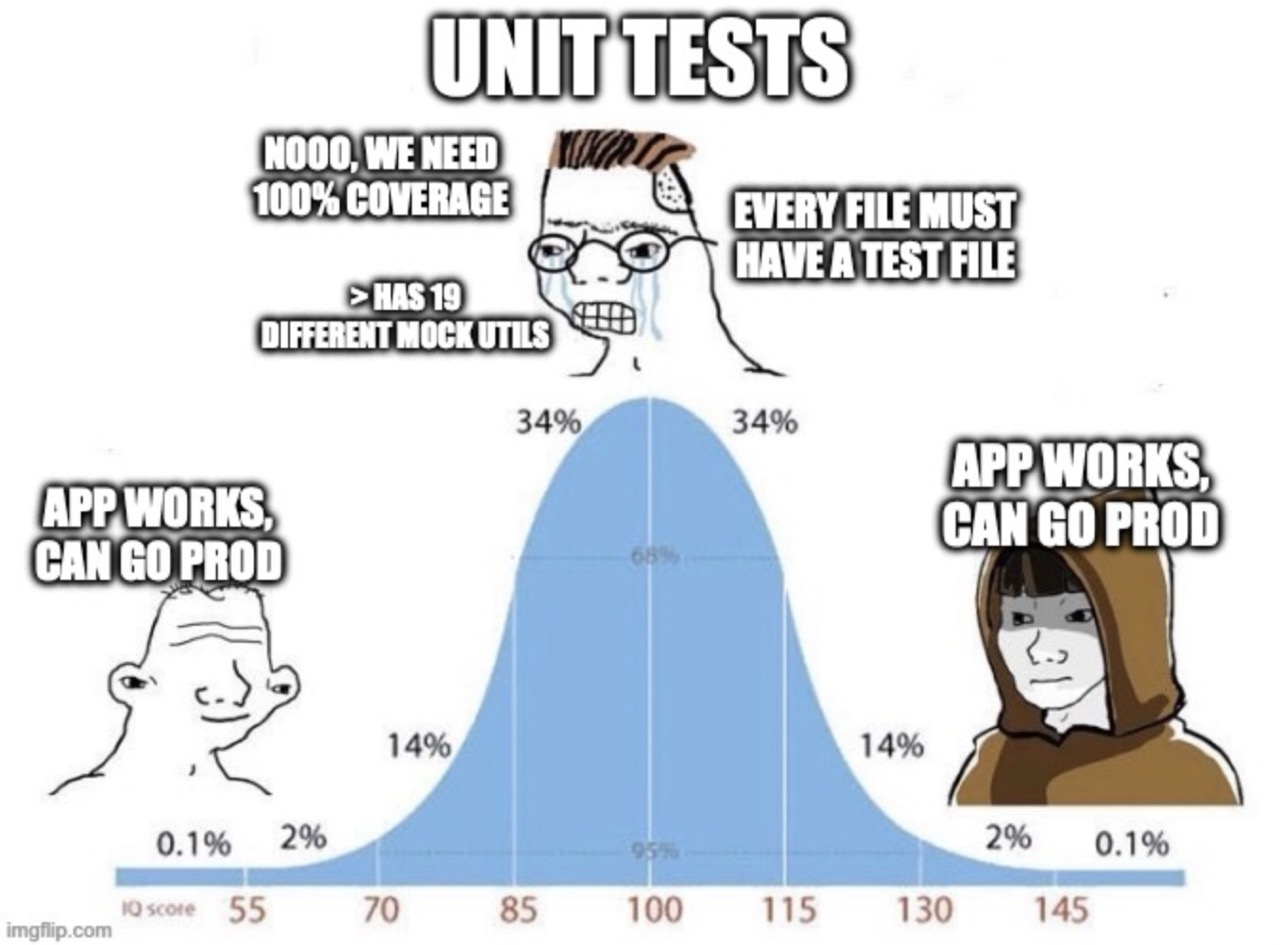

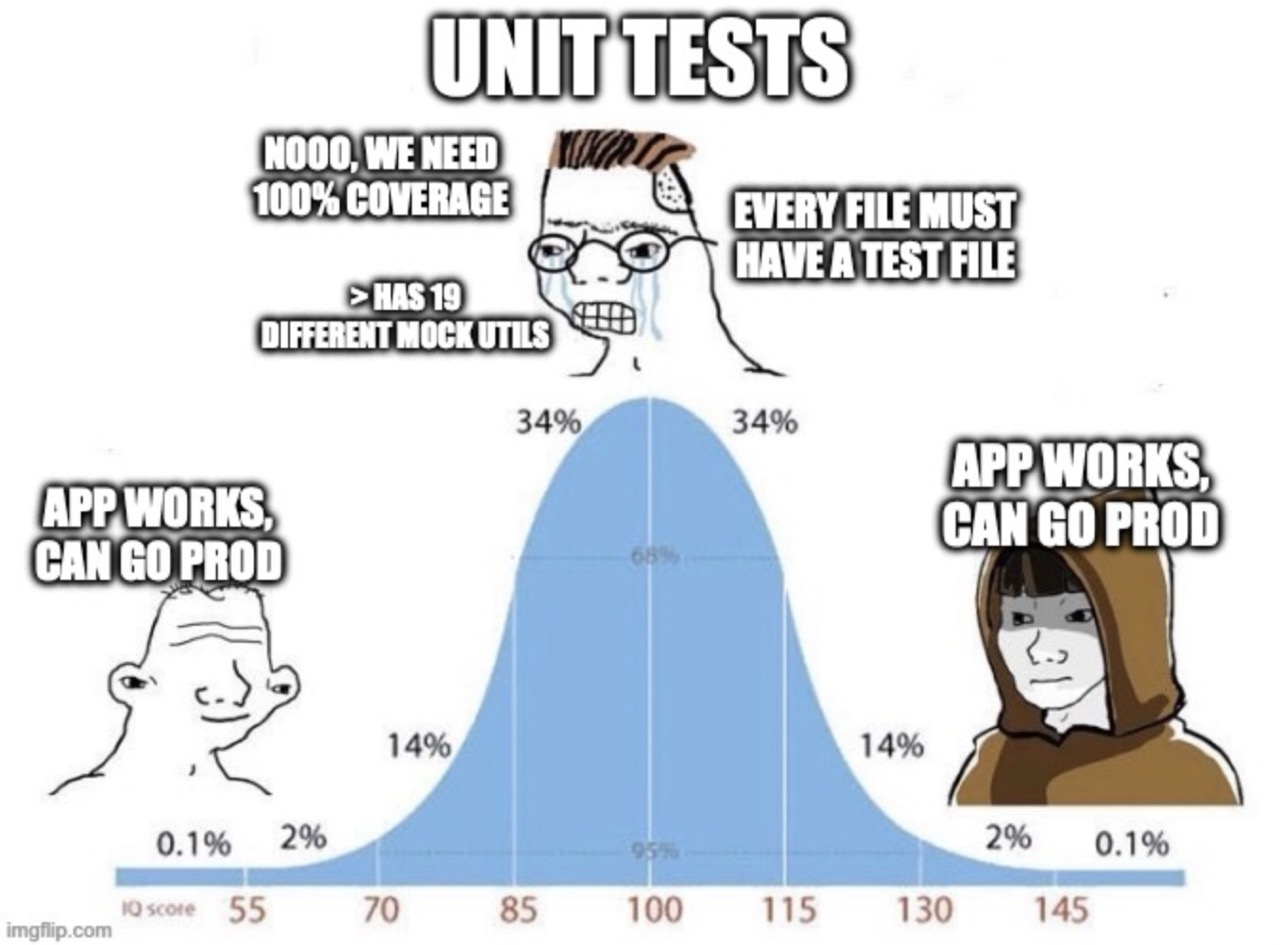

The 200 IQ take is that a tiny amount of coverage can produce 99% of the results. A few simple but crucial tests, quickly implemented, are infinitely better than no tests at all. On a long enough timeline, something on the site is going to fail or regress, whether through human error or force majeure. My goal is to spare you the embarrassment of only finding out about the issue from an angry customer (which certainly has never happened to me and I'm offended that you would even conceive of the possibility).

Types of Unexpected Issues

Let's think through all the different types of unexpected issues that a website might experience on a long enough timeline:

- Net-new content-related issues which were not previously known or accounted for (often isolated but problematic); for example: a content author deselects all the items in a Multilist field and publishes the page without checking it -- the component that references the field begins throwing an error because the developer forgot to account for that scenario

- Regressions introduced by devs

- Third party dependency failures / outages

- Infrastructure issues (CDN cache problems, load balancer issues, database timeouts)

- Browser-specific front end issues

- Performance degradation (slow page / API loads, timeouts)

- Security issues (SSL cert expiration, CORS policy changes, CSP violations, authentication issues)

- Data / content issues (publishing failures, asset 404s, formatting changes)

The Cost of Unexpected Issues

The cost of an unexpected issue depends on the nature of the issue, but ultimately the goal is the same: we are trying to prevent churn.

Let's assume that the scenario below arose because of a regression that was recently deployed by a developer:

- Client discovers issue

- Client emails you about it

- You drop everything you are doing to reply and investigate the issue

- You determine the root cause of the issue and notify the client

- You create a fix

- You test the fix

- You deploy the fix

- Etc.

How long the resolution process takes varies widely, from 10 minutes all the way to days or even weeks, with effort and disruptions peppered throughout (chronic churn).

Let's keep it simple and conservative and say that the cost is between 1 and 8 man hours per incident.

Also, keep in mind the hidden cost of these issues: rack up enough of them, and your client may begin to distrust you, and you may develop a reputation as a low-quality partner.

The Cost of Setting Up Automated Tests

Since I've already done most of the hard work for you, you could probably repurpose and deploy the automated tests in less than a day, assuming you've got a modern and fast continuous deployment pipeline. This shouldn't take long. If it does, you're doing something wrong.
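To put rough numbers on that comparison, here is a back-of-the-envelope sketch. It assumes the setup takes a full 8-hour day (the upper bound of "less than a day") and that each incident costs between 1 and 8 hours, as estimated in the previous section. The function name and the figures are illustrative only, not measurements.

```typescript
// Hypothetical helper: how many prevented incidents pay back the setup effort?
function breakEvenIncidents(setupHours: number, hoursPerIncident: number): number {
  if (setupHours < 0 || hoursPerIncident <= 0) {
    throw new RangeError('setupHours must be >= 0 and hoursPerIncident must be > 0');
  }
  // Round up: an incident is either prevented or it is not.
  return Math.ceil(setupHours / hoursPerIncident);
}

const SETUP_HOURS = 8; // assumption: one working day of setup

console.log(breakEvenIncidents(SETUP_HOURS, 8)); // 1 (expensive incidents)
console.log(breakEvenIncidents(SETUP_HOURS, 1)); // 8 (cheap incidents)
```

Under these assumptions the tests pay for themselves after somewhere between one and eight caught issues, before counting the reputational cost mentioned earlier.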

The Most Basic Tests

We're going to start with laughably basic tests:

- Is my global search returning results?

- Does my carousel component on x page have a minimum of 3 items in it?

- Does my x component contain something that I'm always expecting to be there?

- Do pages of type x always have component y that all of them should have?

- Does my GraphQL endpoint return key information that I'm expecting?
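Most of these checks boil down to "is the thing I expect actually there?". As a minimal sketch of that idea for the GraphQL case, the helper below reports which expected keys are missing from a JSON response. The function names, the dotted-path convention, and the sample response are all made up for illustration; your real response shape will differ.

```typescript
// Hypothetical helper: report which expected keys are missing from a JSON response.
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

// Walk a dotted path such as "item.url.path" through nested objects.
function getPath(data: Json, path: string): Json | undefined {
  let current: Json | undefined = data;
  for (const key of path.split('.')) {
    if (current === null || typeof current !== 'object' || Array.isArray(current)) {
      return undefined;
    }
    current = current[key];
  }
  return current;
}

function missingKeys(data: Json, requiredPaths: string[]): string[] {
  return requiredPaths.filter((path) => {
    const value = getPath(data, path);
    // Treat absent values, nulls, empty strings, and empty arrays as "missing".
    return (
      value === undefined ||
      value === null ||
      value === '' ||
      (Array.isArray(value) && value.length === 0)
    );
  });
}

// Sample response (invented for this example)
const response: Json = {
  item: { name: 'Home', children: { results: [] } },
};

console.log(missingKeys(response, ['item.name', 'item.children.results', 'item.url.path']));
// [ 'item.children.results', 'item.url.path' ]
```

A test can then simply assert that the returned array is empty, which also gives you a readable failure message listing exactly what went missing.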

The Approach

Hit the PROD site in a few key areas and deliver the value right away. Then, optionally, deploy the tests further down the stack, where you have to contend with more access restrictions.

Considerations

Below are all of the interesting considerations I encountered while working on this:

- When the tests should run (cron, on-demand, pull request creation, successful Vercel builds)

- Preventing test-driven web requests from showing up in server-side analytics (you can't, but you can add a request header so they can be identified and filtered out)

- Failure notifications (who is notified in each failure scenario)

- Whether the tests run on preview builds, production builds, or both

- If and how tests should run after content publishing operations

- Branch requirements (should updates to a branch be contingent on the tests passing?)

- Blocking requests to analytics services such as Google Analytics, LinkedIn, etc.

- Reducing bandwidth consumption (no need to load images with simple text based tests)

- Preventing WAF access issues (ensuring that the agent doesn't get blocked)

- GitHub billing: each account has a limit on the number of Actions minutes included in its plan. Enterprise plans come with 50,000 minutes per month, after which they are billed by usage -- that is more than enough for basic tests. It's a tragedy if you are not using a good chunk of those minutes every month. Also keep in mind storage limits (often consumed by caches).

- GraphQL endpoint URLs and API keys

Many of the above are already accounted for / mitigated in the example code, particularly around blocking unnecessary requests, minimizing bandwidth consumption, and minimizing Actions minutes consumption.
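On the analytics point: since the test requests carry an identifying header (x-automation-test with the value playwright in the config further down), anything server-side can use it to filter that traffic out or to allow it through. The sketch below is one way that check might look. It assumes your server exposes headers as a plain object, similar to Node's request headers; the function name is hypothetical.

```typescript
// Hypothetical server-side check for the header sent by the Playwright config.
const AUTOMATION_HEADER = 'x-automation-test';

function isAutomationRequest(
  headers: Record<string, string | string[] | undefined>
): boolean {
  // HTTP header names are case-insensitive, so normalize before comparing.
  for (const [name, value] of Object.entries(headers)) {
    if (name.toLowerCase() !== AUTOMATION_HEADER) continue;
    const values = Array.isArray(value) ? value : [value ?? ''];
    return values.some((v) => v.trim().toLowerCase() === 'playwright');
  }
  return false;
}

console.log(isAutomationRequest({ 'X-Automation-Test': 'playwright' })); // true
console.log(isAutomationRequest({ 'user-agent': 'Mozilla/5.0' })); // false
```

Keep in mind that anyone can send this header, so it is fine for filtering analytics but should not be the only thing a WAF rule relies on.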

Example Code

Run npm install -D @playwright/test dotenv graphql-request, then add the following files to get the tests running.

playwright.config.ts

    import { defineConfig, PlaywrightTestConfig } from '@playwright/test';
    import * as dotenv from 'dotenv';

    // Load TEST_URL, GRAPHQL_ENDPOINT, etc. from a local .env file if present
    dotenv.config();

    const isCI: boolean = !!process.env.CI;

    const config: PlaywrightTestConfig = {
      // Concurrency and retries
      workers: isCI ? 4 : undefined, // 4 on CI, default value on local
      retries: isCI ? 2 : 0, // Retry on CI only
      timeout: 60_000, // 60 seconds per test
      globalTimeout: 10 * 60_000, // 10 mins for entire run
      expect: { timeout: 10_000 }, // 10 seconds for expect() assertions

      // Fail build on CI if test.only is in the source code
      forbidOnly: isCI,

      globalSetup: require.resolve('./global-playwright-setup'),

      // Shared settings for all tests
      use: {
        baseURL: process.env.TEST_URL || 'https://www.YOUR_LIVE_SITE.com',
        // Helps us identify and allow the worker requests
        extraHTTPHeaders: { 'x-automation-test': 'playwright' },
        navigationTimeout: 30_000, // 30 seconds for page.goto / click nav
        actionTimeout: 30_000, // 30 seconds for each action
        trace: 'on-first-retry', // Collect trace when retrying the failed test
      },

      // Chromium only, to keep runs fast
      projects: [{ use: { browserName: 'chromium' } }],
    };

    export default defineConfig(config);

global-playwright-setup.ts

    import { chromium, LaunchOptions, BrowserContext } from '@playwright/test';
    import { blockExternalHosts } from './src/util/block-external-hosts.js';

    // Additional configurations for the playwright tests
    // (only the parts of the config that this setup touches)
    type Config = {
      projects: Array<{
        use: {
          launchOptions?: LaunchOptions & {
            contextCreator?: (...args: any[]) => Promise<BrowserContext>;
          };
        };
      }>;
    };

    // NOTE: contextCreator is not a documented Playwright launch option.
    // Verify that your Playwright version honors it before relying on this hook.
    export default async function globalSetup(config: Config): Promise<void> {
      for (const project of config.projects) {
        const original = project.use.launchOptions?.contextCreator;

        project.use.launchOptions = {
          ...project.use.launchOptions,
          contextCreator: async (...args: any[]): Promise<BrowserContext> => {
            const context = original
              ? await original(...args)
              : await chromium.launchPersistentContext('', {});

            await blockExternalHosts(context);

            // Abort all image / font requests -- we don't currently use them in our tests and we don't want to consume excessive bandwidth
            await context.route('**/*', route => {
              const req = route.request();
              if (['image', 'font'].includes(req.resourceType())) return route.abort();
              // fallback() hands the request to the host filter registered above;
              // continue() would send it straight to the network and skip that filter
              return route.fallback();
            });

            return context;
          },
        };
      }
    }

src/util/block-external-hosts.ts

    // Util for test suite to abort all network requests except those which are needed for fetching HTML
    // This prevents the loading of analytics, ads, pixels, cookie consent, etc.
    import { BrowserContext } from '@playwright/test';

    export async function blockExternalHosts(context: BrowserContext, extraAllowedHosts: string[] = []): Promise<void> {
      // The domains that we expect Playwright to hit
      // Note that subdomains are automatically allowed
      const ALLOWED_HOSTS: string[] = [
        'www.YOUR_LIVE_SITE.com',
        'YOUR_LIVE_SITE.com',
        ...extraAllowedHosts,
      ];

      function hostIsAllowed(hostname: string): boolean {
        hostname = hostname.toLowerCase();
        return ALLOWED_HOSTS.some(allowed => {
          allowed = allowed.toLowerCase();
          return (
            hostname === allowed ||
            hostname.endsWith('.' + allowed) // subdomains
          );
        });
      }

      // Intercept all requests
      await context.route('**/*', (route) => {
        const url = route.request().url();
        const isHttpScheme = /^https?:/i.test(url);

        // Let non-HTTP(S) URLs (data:, blob:, about:) through untouched
        if (!isHttpScheme) {
          return route.continue();
        }

        const { hostname } = new URL(url);
        if (hostIsAllowed(hostname)) {
          return route.continue();
        }

        // Everything else is an external host: block it
        return route.abort();
      });

      // Modify global vars related to analytics before page scripts run to prevent tracking and console errors
      await context.addInitScript(() => {
        (window as any)['ga-disable-all'] = true;
        (window as any)['ga-disable'] = true;
        (window as any).lintrk = () => {};
        (window as any).clarity = () => {};
        (window as any).fbq = () => {};
      });
    }
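The two filters above (host allow-list and image / font blocking) live in separate route handlers, so the outcome depends on the order in which Playwright runs them. One alternative, sketched here as an assumption rather than what the code above does, is to pull the decision into a single pure function that is easy to unit test without a browser. The names decideRoute, RouteDecision, and BLOCKED_RESOURCE_TYPES are hypothetical.

```typescript
// Hypothetical pure function combining both filters: resource type + host allow-list.
type RouteDecision = 'continue' | 'abort';

const BLOCKED_RESOURCE_TYPES = new Set(['image', 'font']);

function decideRoute(url: string, resourceType: string, allowedHosts: string[]): RouteDecision {
  // Non-HTTP(S) URLs (data:, blob:, about:) are passed through, as in the util above.
  if (!/^https?:/i.test(url)) return 'continue';

  // Skip heavy assets that text-based tests do not need.
  if (BLOCKED_RESOURCE_TYPES.has(resourceType)) return 'abort';

  // Allow exact host matches and their subdomains only.
  const hostname = new URL(url).hostname.toLowerCase();
  const allowed = allowedHosts.some((host) => {
    const h = host.toLowerCase();
    return hostname === h || hostname.endsWith('.' + h);
  });
  return allowed ? 'continue' : 'abort';
}

const hosts = ['example.com'];
console.log(decideRoute('https://www.example.com/about', 'document', hosts)); // continue
console.log(decideRoute('https://www.google-analytics.com/collect', 'xhr', hosts)); // abort
console.log(decideRoute('https://www.example.com/logo.png', 'image', hosts)); // abort
```

Inside a single context.route handler you would then call route.continue() or route.abort() depending on the returned value.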

Here we perform two types of tests:

- Ensuring that a GraphQL endpoint returns what we are expecting

- Ensuring that a number of pages contain the components we are expecting

tests/my-playwright-tests.spec.ts

    import { test, expect } from '@playwright/test';
    import { GraphQLClient, gql } from 'graphql-request';

    test('SOME_PAGE returns article cards', async ({ page, baseURL }) => {
      const path = '/SOME_PAGE';
      console.log(`Testing article cards on ${baseURL + path}`);
      await page.goto(baseURL + path, { waitUntil: 'domcontentloaded' });

      // Locators are lazy, so they are created without await
      const cardContainer = page.locator('div[class*="articleDirectory_resultsContainer__"]');
      const ul = cardContainer.locator('ul[class*="cardGrid_cardGrid__"]');
      await expect(ul).toHaveCount(1);

      const cards = ul.locator('li div[class*="articleCard_card__"]');
      const count = await cards.count();
      expect(count).toBeGreaterThanOrEqual(10);
    });

    test('locationPanel appears on office pages', async ({ page, baseURL }) => {
      const endpoint = process.env.GRAPHQL_ENDPOINT;
      const apiKey = process.env.SITECORE_API_KEY;
      if (!endpoint || !apiKey || !baseURL) throw new Error('Missing environment variables');

      const client = new GraphQLClient(endpoint, {
        headers: { sc_apikey: apiKey },
      });

      // Selection sets are inferred from the fields read further down
      const queries = {
        office: gql`
          query {
            item(path: "/sitecore/content/ACME/home/offices", language: "en") {
              children { results { url { path } } }
            }
          }
        `,
        render: gql`
          query GetRendered($path: String!, $language: String!) {
            item(path: $path, language: $language) {
              rendered
            }
          }
        `,
      };

      // Collect the URL paths of all office pages
      const paths: string[] = [];
      for (const key of ['office'] as const) {
        const data = await client.request<any>(queries[key]);
        const validPaths = (data?.item?.children?.results ?? [])
          .filter((r: any) => r?.url?.path)
          .map((r: any) => r.url.path);
        paths.push(...validPaths);
      }

      // Keep only the pages whose layout includes the locationPanel component
      const testPaths: string[] = [];
      for (const path of paths) {
        try {
          const fullPath = '/sitecore/content/ACME/home' + path;
          const data = await client.request<any>(queries.render, { path: fullPath, language: 'en' });
          const renderings = data?.item?.rendered?.sitecore?.route?.placeholders?.['jss-main'] ?? [];
          if (renderings.some((r: any) => r.componentName === 'locationPanel')) {
            testPaths.push(path);
          }
        } catch (e: any) {
          console.warn(`Skipping ${path}: ${e.message}`);
        }
      }
      if (!testPaths.length) throw new Error('No pages found with locationPanel component');

      for (const path of testPaths) {
        await test.step(`Visiting ${path}`, async () => {
          await page.goto(baseURL + path, { waitUntil: 'domcontentloaded' });
          console.log(`Testing locations component on ${baseURL + path}`);

          const ourLocationsContainer = page.locator('section[class*="ourLocations_container__"]');
          await expect(ourLocationsContainer).toBeVisible();

          const ourLocationsList = ourLocationsContainer.locator('ul[class*="carousel_carousel__"]');
          await expect(ourLocationsList).toHaveCount(1);

          const ourLocationsCards = ourLocationsList.locator('li div[class*="ourLocations_card__"]');
          const ourLocationsCount = await ourLocationsCards.count();
          expect(ourLocationsCount).toBeGreaterThanOrEqual(1);
        });
      }
    });
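The office page test only inspects the top level of the jss-main placeholder. If locationPanel could ever sit inside a container component, a recursive search is one option. The sketch below assumes that nested renderings expose their own placeholders object keyed by placeholder name; the Rendering interface is a deliberately minimal stand-in and hasComponent is a hypothetical helper.

```typescript
// Minimal assumed shape of a layout rendering; real payloads carry more fields.
interface Rendering {
  componentName?: string;
  placeholders?: Record<string, Rendering[]>;
}

// Returns true if any rendering, at any nesting depth, has the given component name.
function hasComponent(renderings: Rendering[], componentName: string): boolean {
  return renderings.some((rendering) => {
    if (rendering.componentName === componentName) return true;
    // Recurse into nested placeholders, if any.
    const nested = Object.values(rendering.placeholders ?? {});
    return nested.some((children) => hasComponent(children, componentName));
  });
}

// Sample layout (invented for this example)
const sampleLayout: Rendering[] = [
  { componentName: 'hero' },
  {
    componentName: 'container',
    placeholders: { 'container-body': [{ componentName: 'locationPanel' }] },
  },
];

console.log(hasComponent(sampleLayout, 'locationPanel')); // true
console.log(hasComponent(sampleLayout, 'carousel')); // false
```

It could replace the renderings.some(...) check in the loop that builds testPaths.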

Once all of the Playwright tests are in place and confirmed working via npx playwright test, we can add the GitHub Actions workflow.

These are the instructions for the GitHub Actions agent.

In order to start running the workflow from the GitHub UI, you need to merge this file into your main branch; only then will it show up there. Otherwise, you can run the tests locally on demand.

.github/workflows/run-front-end-tests.yml

    name: Run Front End Tests

    on:
      workflow_dispatch: # Allows manual runs from GitHub UI
      schedule: # Run nightly at 00:00 UTC
        - cron: '0 0 * * *'
      # repository_dispatch: # Run when a Vercel deployment succeeds
      #   types: [vercel.deployment.success]

    permissions:
      contents: read # Ensure caching permissions

    jobs:
      e2e:
        # if: github.event_name != 'repository_dispatch' || github.event.client_payload.target == 'preview'
        concurrency: # Automatically cancel any running builds from same ref
          group: e2e-${{ github.ref }}
          cancel-in-progress: true
        runs-on: ubuntu-latest
        steps:
          - name: Checkout commit
            uses: actions/checkout@v4
            with:
              # Use the exact commit that Vercel built, use current for manual/cron
              ref: ${{ github.event.client_payload.git.sha || github.sha }}

          - name: Set up Node.js
            uses: actions/setup-node@v4
            with:
              # node-version: pin this to the Node version your project targets
              cache: npm
              cache-dependency-path: package-lock.json

          - name: Restore Playwright browsers
            id: pw-cache
            uses: actions/cache@v4
            with:
              path: ~/.cache/ms-playwright # Playwright binaries location
              key: pw-${{ runner.os }}-${{ hashFiles('**/package-lock.json') }}

          - name: Install dependencies
            run: npm ci --no-audit --prefer-offline --progress=false

          - name: Install Chromium if Cache Missed
            if: steps.pw-cache.outputs.cache-hit != 'true'
            run: npx playwright install chromium --with-deps

          - name: Run Playwright tests
            run: |
              echo "Running tests against: $TEST_URL"
              npx playwright test
            env:
              # Use the Vercel preview url, use PROD for manual/cron
              TEST_URL: ${{ github.event.client_payload.url || 'https://www.YOUR_LIVE_SITE.com' }}
              GRAPHQL_ENDPOINT: ${{ secrets.GRAPHQL_ENDPOINT }}
              SITECORE_API_KEY: ${{ secrets.SITECORE_API_KEY }}

Future Considerations

Once a few basic tests are in place, you can explore other interesting cases such as:

- Does my site load if ad blockers are enabled?

- Does my site look fine when browser dark mode is enabled?

Despite those being more advanced test cases, you can take the same approach of determining the simplest way to test them.

Playwright can also be used to take screenshots, which means you can do visual regression testing.

And remember the struggle:

-MG