Headless Cloud Infrastructure for JSS: An Overview

The answer to how one should architect cloud infrastructure for a JSS site is, as usual, "it depends". In practice, most people get a JSS site deployed in the cloud by "doing and using what I'm already familiar with", which translates to: "setting up JSS in the cloud the same way I have it on my local machine".

Integrated Topology

One of Sitecore JSS's best features is that it splits out the responsibilities of a web server so it can be scaled horizontally and customized for advanced client-side routing scenarios. Here's how you wouldn't take advantage of that: by having your Node.js SSR (server-side rendering) running on the same box as your content delivery server.

This is also known as the "Integrated" topology (docs):

In an integrated topology, the Sitecore CD servers perform the server-side rendering of the JSS app using their own integrated (same server, out of process pool) Node.js services. This mode is less flexible in terms of scalability than a headless deployment, especially with regards to CDN integrations and the capability to scale API servers separately from SSR instances. In this mode, API hosting and rendering are performed on the same server.

There is also less flexibility to do advanced client-side routing scenarios when using integrated topology, as the requests are still pre-parsed by Sitecore and subject to Sitecore routing. In headless mode, you can run custom Express middleware to customize the server extensively. In integrated mode, similar customizations must be done in Sitecore (C#) instead.

In other words, the Integrated topology is simple, and it might work fine for your use case in the beginning. However, if you want to experience the true nirvana that comes with JSS, you'll want to set it up the Headless, galaxy-brain way that Sitecore recommends.

Headless Topology

Let's graduate out of monolithic content delivery architecture.

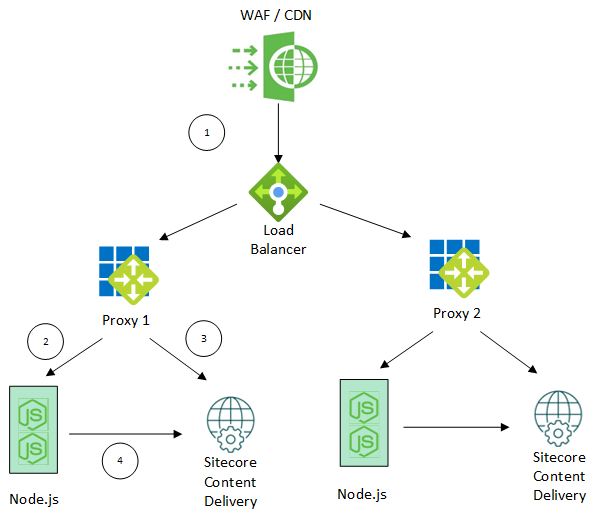

Here's what we'll be showing (docs):

When running in headless mode, the Sitecore Content Delivery (CD) servers do not directly serve the public website. Instead, a cluster of inexpensive Node.js servers hosts the public-facing website. These Node servers run the node-headless-ssr-proxy. This server-side rendering (SSR) proxy makes requests to APIs running on the Sitecore CD servers and then renders the JSS application to HTML before returning it to the client. These SSR proxies can be hosted anywhere Node.js can run.

In the headless topology, you can use private Sitecore CD servers. The SSR proxies can also reverse-proxy specific paths, such as API calls and media library requests, to the Sitecore CDs. In this setup, the JSS app uses the proxy URL as its API server (that is, https://www.mysite.com), and the SSR proxy proxies to https://sitecore-cds.mysite.com.
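To make the reverse-proxy idea concrete, here is a minimal sketch of the decision an SSR proxy makes for each request. It is not the node-headless-ssr-proxy implementation; the `ProxySettings` shape, the `toSitecoreUrl` helper, and the prefix list are assumptions made for illustration, and the two hosts are the example values from the docs quoted above.

```typescript
// Hypothetical settings shape; only the two host names come from the docs.
interface ProxySettings {
  publicOrigin: string;      // what browsers (and the JSS app) use as API server
  sitecoreOrigin: string;    // private CD servers, reachable only by the proxy
  proxiedPrefixes: string[]; // paths passed straight through to Sitecore
}

const settings: ProxySettings = {
  publicOrigin: "https://www.mysite.com",
  sitecoreOrigin: "https://sitecore-cds.mysite.com",
  proxiedPrefixes: ["/sitecore/api/", "/-/media/", "/-/jssmedia/"],
};

// Returns the CD URL for a reverse-proxied request, or null when the
// request should be server-side rendered by the Node app instead.
function toSitecoreUrl(pathAndQuery: string, s: ProxySettings): string | null {
  const isProxied = s.proxiedPrefixes.some((prefix) => pathAndQuery.indexOf(prefix) === 0);
  return isProxied ? s.sitecoreOrigin + pathAndQuery : null;
}
```

With these settings, `toSitecoreUrl("/-/media/logo.png", settings)` points at the private CD host, while `toSitecoreUrl("/about", settings)` returns `null` and the page is rendered by Node.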

The Infrastructure

How does one architect the infrastructure? One solid high-level approach (with a bias towards Azure in this case) is:

A few notes:

- The Sitecore Content Delivery servers could be virtual machines, Azure App Services, a Kubernetes cluster, Docker, etc.

- The Proxies are simply a mechanism by which requests can be routed to the appropriate resources.

1 - WAF / CDN / Load Balancer

The Web Application Firewall (WAF), Content Delivery Network (CDN), and the Load Balancer (LB) will make up the front line of your infrastructure. This is the public entry point. If you're not using these as part of your infrastructure, turn 180 degrees and walk away. If you think you don't need a WAF, think again.

- A solid WAF setup can be had for free (Cloudflare is great)

- Keeps your infrastructure secure

- Reduces noise in your logs

2 - IIS Proxy

In this step, the Proxy routes requests to the Node.js app endpoint.

In an Azure PaaS setting, the Node.js app would be an App Service Slot (which allows for rolling deployments).

The Proxy is also responsible for pinning Node slots and Content Delivery requests together, which is relevant for our deployment.

Thanks to the pinning that takes place here, we can be sure that any callbacks between the Node app and the Content Delivery instance are bound together instead of potentially bouncing between different Node and CD instances.
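One way to picture the pinning is as a lookup table that pairs each Node slot with exactly one CD instance. The sketch below is an assumption about how such a pairing could be modelled, not the actual proxy configuration; the slot names, host names, and the `pinFor` helper are all hypothetical.

```typescript
// Hypothetical slot and CD host names, for illustration only.
type Pin = { nodeSlot: string; cdHost: string };

const pins: Pin[] = [
  { nodeSlot: "node-blue", cdHost: "cd-blue.internal" },
  { nodeSlot: "node-green", cdHost: "cd-green.internal" },
];

// Resolve the pair once per request, then use the same pair for the
// rendering hop and for any Sitecore-bound hop (media, API calls).
function pinFor(activeSlot: string): Pin {
  for (const pin of pins) {
    if (pin.nodeSlot === activeSlot) {
      return pin;
    }
  }
  // Failing loudly beats silently falling back to an unpinned CD instance.
  throw new Error(`No CD instance pinned to slot "${activeSlot}"`);
}
```

Because the pair is resolved in one place, a request handled by `node-blue` can only ever call back into `cd-blue.internal`, which is the guarantee described above.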

3 - Path-Based Routing

Requests for paths that the Node app does not handle are sent, via path-based routing, to Sitecore instead: media, virtual directories, and Sitecore API routes.

Example Rewrite Regular Expressions:

- ^-/media/.*

- ^-/jssmedia/.*

- ^sitecore/api/.*

- ^virtual_directory_path/.*
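As a rough sketch of how those expressions behave, the snippet below applies them to a few request paths. It assumes the patterns are matched against the path without its leading slash, which is how IIS URL Rewrite presents the URL to a rule; the `routeFor` helper and the `Target` type are made up for this example.

```typescript
// The four patterns from the list above, written as regular expression literals.
const sitecorePatterns: RegExp[] = [
  /^-\/media\/.*/,
  /^-\/jssmedia\/.*/,
  /^sitecore\/api\/.*/,
  /^virtual_directory_path\/.*/, // placeholder name from the list, not a real path
];

type Target = "sitecore" | "node";

// Decide which backend should receive a request path.
function routeFor(requestPath: string): Target {
  // Assumption: drop the leading slash before matching, as IIS URL Rewrite does.
  const path = requestPath.replace(/^\//, "");
  const matchesSitecore = sitecorePatterns.some((pattern) => pattern.test(path));
  return matchesSitecore ? "sitecore" : "node";
}
```

For example, `routeFor("/-/media/hero.jpg")` and `routeFor("/sitecore/api/layout/render/jss")` go to Sitecore, while `routeFor("/products/shoes")` falls through to the Node app.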

4 - Node App

In this step, the Node app fetches layout and content information from the CD instance.
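For a sense of what that fetch looks like, here is a small sketch that builds a Layout Service request URL. It assumes the default JSS REST endpoint (`/sitecore/api/layout/render/jss`) with its `item`, `sc_lang`, and `sc_apikey` query parameters; the `LayoutRequest` shape, the `layoutServiceUrl` helper, and the sample values are hypothetical, and your endpoint may differ if the Layout Service has been reconfigured.

```typescript
// Hypothetical request shape; field names are chosen for this example.
interface LayoutRequest {
  cdOrigin: string; // CD instance this Node app is pinned to
  itemPath: string; // route being rendered, e.g. "/about"
  language: string; // content language, e.g. "en"
  apiKey: string;   // placeholder value, not a real key
}

// Build the URL the Node app would call to get layout and content as JSON.
function layoutServiceUrl(request: LayoutRequest): string {
  const query = [
    `item=${encodeURIComponent(request.itemPath)}`,
    `sc_lang=${encodeURIComponent(request.language)}`,
    `sc_apikey=${encodeURIComponent(request.apiKey)}`,
  ].join("&");
  return `${request.cdOrigin}/sitecore/api/layout/render/jss?${query}`;
}
```

The Node app would then request this URL from the CD instance and hand the JSON response to the JSS app for rendering.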

Conclusion

Today you've seen a high-level overview of a solid headless infrastructure configuration. If you need help with your cloud infrastructure, do reach out to us @ One North!

Cheers,

Marcel