Your Sitecore Site is Being Farmed to Train AI


NEW POST, a follow-up to my previous post titled "Your Sitecore Site is Being Farmed to Train AI". This post is a continuation of that post, and is intended to provide more context and guidance for Sitecore implementation partners and their clients.

UPDATE (JULY 7, 2024): Cloudflare recently released new functionality for blocking AI bots, scrapers, and crawlers: https://blog.cloudflare.com/declaring-your-aindependence-block-ai-bots-scrapers-and-crawlers-with-a-single-click

https://rknight.me/blog/perplexity-ai-is-lying-about-its-user-agent/

Overview

Sitecore is used by many large and reputable organizations: banks, insurance companies, government agencies, not-for-profits, law firms, etc. These are some of the most reputable and trusted sites on the internet, and this makes them a prime target for web scraping and data mining to train AI models. The sites are large, complex, and hold a lot of data. They are also often not as well protected as they should be. Many Sitecore sites have effectively gifted all of their data to AI companies.

And now:

Chamber of Progress, a tech-industry lobbying group whose members include Apple, Meta and Amazon, has launched a campaign to defend the practice of using copyrighted works to train AI.

This emerging paradigm calls many existing tools, processes, jobs, and companies into question, and renders many obsolete. This also creates endless new opportunities for those who can adapt.

In this post, I will discuss some of the implications of this new paradigm for implementation partners and their clients.

The Discovery

This hit close to home when I was looking through some IIS logs and found Anthropic's "ClaudeBot" making over 325,000 requests to a Sitecore site in a single day. They weren't even subtle about it; they were crawling the site at around 10 requests per second for more than 8 hours straight, and the requests seemed to be focused on content that was uniquely dense and valuable. This was not a simple "crawl the site and move on" operation; this was a targeted data mining operation.

I looked further back in time and saw that the requests started in November of 2023 and have been happening consistently and more comprehensively ever since.

Anthropic isn't the only one. OpenAI's crawler has been crawling the site as well, and started doing so around the same time as Anthropic.
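If you want to run the same kind of check on your own logs, here is a minimal sketch. It assumes IIS W3C extended log files whose `#Fields:` directive includes `date` and `cs(User-Agent)`. The function name and the token list are illustrative: `ClaudeBot` is the user agent mentioned above, and `GPTBot` (OpenAI's published crawler token) is added as an assumption about what you will want to look for.

```python
from collections import Counter

# User-Agent substrings to look for (assumption: extend this list as needed).
AI_CRAWLER_TOKENS = ("ClaudeBot", "GPTBot")


def count_ai_crawler_requests(lines):
    """Count requests per (date, crawler token) in IIS W3C extended log lines."""
    counts = Counter()
    fields = []
    for raw in lines:
        line = raw.strip()
        if line.startswith("#Fields:"):
            # This directive names the columns of the rows that follow it.
            fields = line[len("#Fields:"):].split()
            continue
        if not line or line.startswith("#") or not fields:
            continue  # skip blank lines, other directives, and headerless rows
        row = dict(zip(fields, line.split()))
        # IIS writes spaces inside the user agent as "+", so a substring
        # match on the token is enough for a rough tally.
        user_agent = row.get("cs(User-Agent)", "").lower()
        for token in AI_CRAWLER_TOKENS:
            if token.lower() in user_agent:
                counts[(row.get("date", "unknown"), token)] += 1
                break
    return counts
```

Feeding it an open log file, for example `count_ai_crawler_requests(open(path, encoding="utf-8", errors="replace"))` with `path` pointing at one of your own log files, returns a `Counter` keyed by date and crawler, which makes a sudden jump in daily volume easy to spot. Keep in mind that this only catches crawlers that identify themselves honestly.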

I started thinking about the implications of this, specifically with regard to all of my Sitecore clients. As Sitecore implementation partners, we are in a unique position to understand how our clients' business models might need serious re-evaluation. We have a duty to take the lead in guiding our clients through this new reality. Their survival might depend on it.

A Thought Experiment

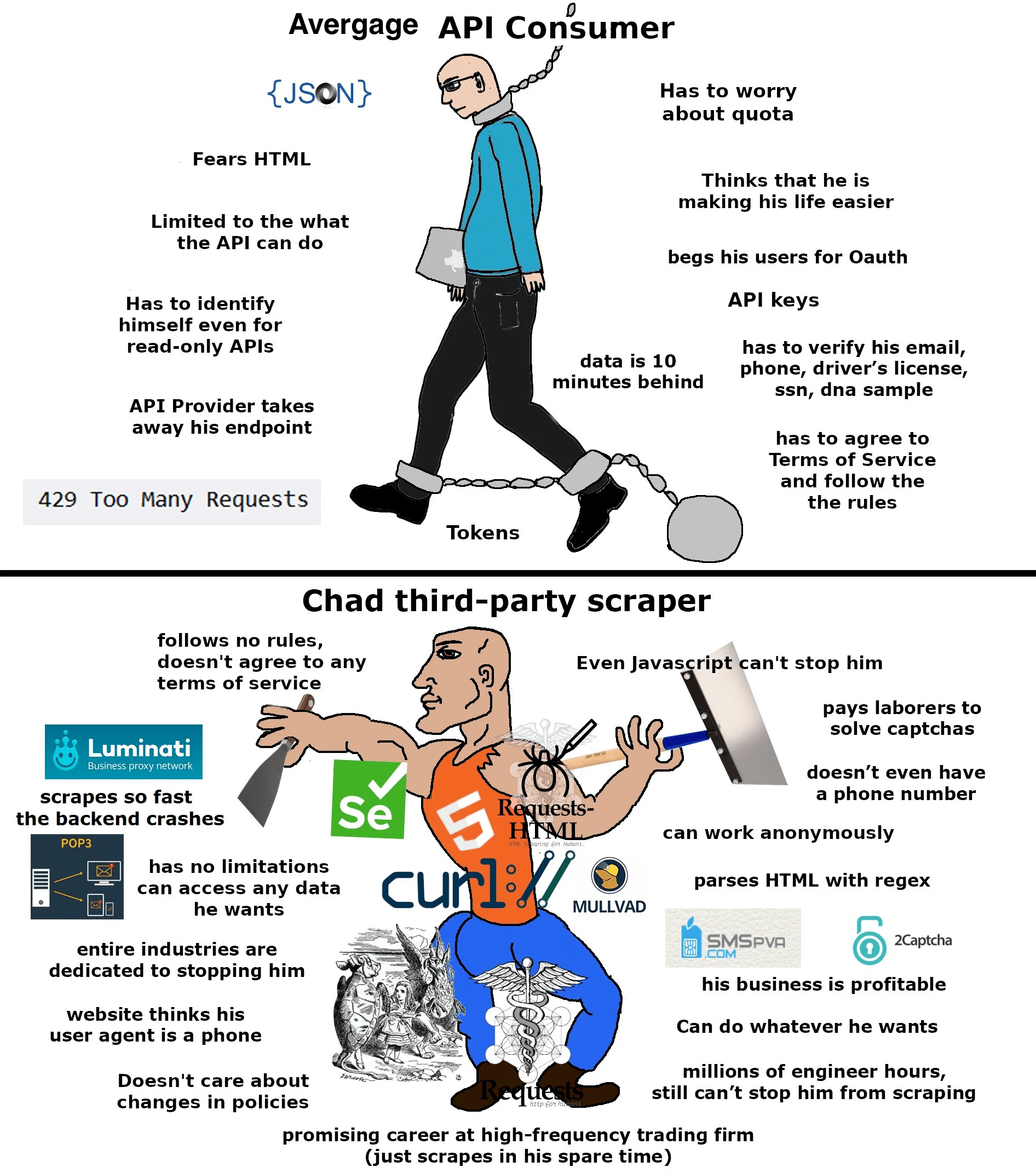

Imagine that you are trying to build artificial general intelligence (AGI). You are very well funded. You have a team of engineers and data scientists who are working around the clock. You are willing to do whatever it takes to build the best training dataset in the world.

You believe that achieving AGI is the most important thing that you can do for humanity. You are therefore willing to break some rules and conventions to make it happen, because the reward far outweighs the risks.

What would you do? Perhaps you would:

- Mine data from sites as much as possible and as quickly as possible before they implement controls to stop you or slow you down

- Create AI agents that browse the web like a human would, passing through account registrations, login screens, forms, CAPTCHAs, and other barriers which block your access to more data

- Use AI to make your crawler super efficient and advanced and able to adjust crawling strategy at any time

- Make your crawler appear as human-like as possible to avoid detection

- Ignore robots.txt and other rules that sites have set up to prevent you from scraping their data

- Use AI to discover security bypasses and vulnerabilities in websites to get more data

- Buy data from data aggregators

- Buy data from the dark web / hackers

- Hack into servers to get data

Now imagine you are a black hat hacker with the goal of using AI to make ill-gotten gains. You are willing to break any law or rule, and AI empowers you more than ever.

On the flip side, imagine you are the CEO of a reputable organization with valuable data on your site. What would it mean for your organization if an AGI were trained on all of your data, and if that AGI could recite and reason about all of your data faster, more accurately, and less expensively than any human could, all via a simple and cheap API call? For many organizations, this requires a rethink of their business model, and fast.

Notable Content Subjects

Certain subject areas get special treatment in the world of search and AI. These are areas where good information is particularly valuable because the stakes are high, and where errors / omissions can have serious consequences. At a high level they are:

- Health and medicine

- Personal finance

- Legal

- Safety and security

- Education

- News and current events

- Employment and careers

- Housing and real estate

- Travel

If your organization falls into one of these categories, understand that your data is particularly valuable, and that your future business model should leverage your data.

This article put it well:

[OpenAI's chief architect] urged companies to differentiate by using language AI APIs and creating unique user experiences, data approaches and model customizations.

... the key differentiator for businesses building language model-powered services is leveraging your own proprietary data.

“The user experience you create, the data you bring to the model and how you customize it and the like service that you expose to the model, that is actually where you folks are going to differentiate and build something like genuinely unique,” Jarvis said. “If you just build a wrapper around one of these very useful models, then you're no different than your competitors.”

The Everything API

Chat-based AI systems are effectively API endpoints which perform arbitrary tasks using plain language on a pay-as-you-go basis. One of the most fascinating realizations for me was that chat-based AI systems turn any arbitrary content (such as the entire web itself) into a fully customizable API. In this new world, EVERYTHING is an API: websites, PDFs, images, videos, etc.

Further, the outputs can be formatted in any desired data structure such as JSON, XML, etc., which makes it easy to integrate with any system. All of this can be done very quickly with minimal technical knowledge.

This means that your Sitecore site is now also an API.

The Changing Role of DXP Implementation Partners

There has been much talk of implementation partners migrating their clients to the cloud, going headless / composable, and so on; however, much of this is just a rehash of the same old stuff:

- Distributed / composable vs monolithic

- Reliability

- Scalability

- Performance

- Cost reduction

- Revenue generation

- Risk mitigation

- Security

All of the above are important, but they are not new. The new stuff is how to operate and market in a world where AI is the new normal.

A significant source of work and revenue for implementation partners is going to be:

- Making client data discoverable and queryable by AI systems

- Training proprietary AI on proprietary data

- Data protection and security

- Discovery and implementation of AI tools for both internal and external purposes such as translation, content generation, search, etc.

- General consultation for how to navigate the new AI-driven world

- Integration between systems

- Cost reduction using AI

- Revenue growth using AI

All of which is to say that implementation partners, just like their clients, will need to adapt to the new AI-driven world.

Takeaways

- Assume that web crawlers are AI-driven. They are not just simple bots that crawl the web and index pages. They are AI systems that are trained to understand and reason about the data that they are collecting. They are able to make inferences and draw conclusions from that data, and to learn from it and improve their performance over time.

- Assume that web crawlers may not respect the rules that you have set up for them.

- Ask your web team if they are monitoring and managing these crawlers.

- Implement WAF, bot detection, rate limiting, and monitoring.

- If your WAF is showing periods of increased traffic, investigate it, even if it is not causing any issues.

- Monitor logs for unusual activity, using AI where appropriate.

- Monitor your bandwidth usage.

- Consider developing a technical and legal framework around the use of your data.

- Think about how your organization will need to evolve in a world where AI is the new normal.

- Think about training your own AI on your data.

- Think hard about what aspects of your business cannot be made obsolete by AI, or in fact would be improved by AI augmentation. Lean into those.
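To make the rate limiting item above a little more concrete, here is a minimal sketch of a sliding-window limiter. In practice this belongs in your WAF, CDN, or reverse proxy rather than in application code; the class name, the parameters, and the choice of client key (IP address, user agent, or a combination) are all assumptions made for illustration.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client key within `window_seconds`."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # client key -> timestamps of requests that were allowed
        self._hits = defaultdict(deque)

    def allow(self, client_key, now):
        """Return True if a request from `client_key` at time `now` is allowed."""
        hits = self._hits[client_key]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: block, throttle, or challenge
        hits.append(now)
        return True
```

For example, `SlidingWindowLimiter(max_requests=5, window_seconds=1.0)` would start rejecting a crawler that sustains around 10 requests per second, while leaving typical human browsing untouched. The caller passes in `now` (for example from `time.monotonic()`), which keeps the logic easy to test.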

Stay.... Intelligent.

-MG